Azure Machine Learning’s “oracle”1 was Bing. The thinking went: if Bing was doing the most advanced machine learning at the company, then by building something Bing could use, we’d build something everyone would need in a few years. One of our learnings from Bing was that data scientists couldn’t use Docker. In fact, for a long time, Bing’s data scientists didn’t even write code, instead using a graphical user interface called “TLC” (The Learning Code)2 to build logistic regression models and run hyperparameter sweeps.

When we finally convinced ourselves that maybe data scientists were starting to prefer Python to GUIs, a profusion of Azure ML-specific nouns were born. “God forbid we let data scientists know we run Kubernetes jobs with their training scripts and store outputs in Azure Blob Storage! Instead, let’s have them run Experiments using Datasets and Environments that push results into a Model store!”

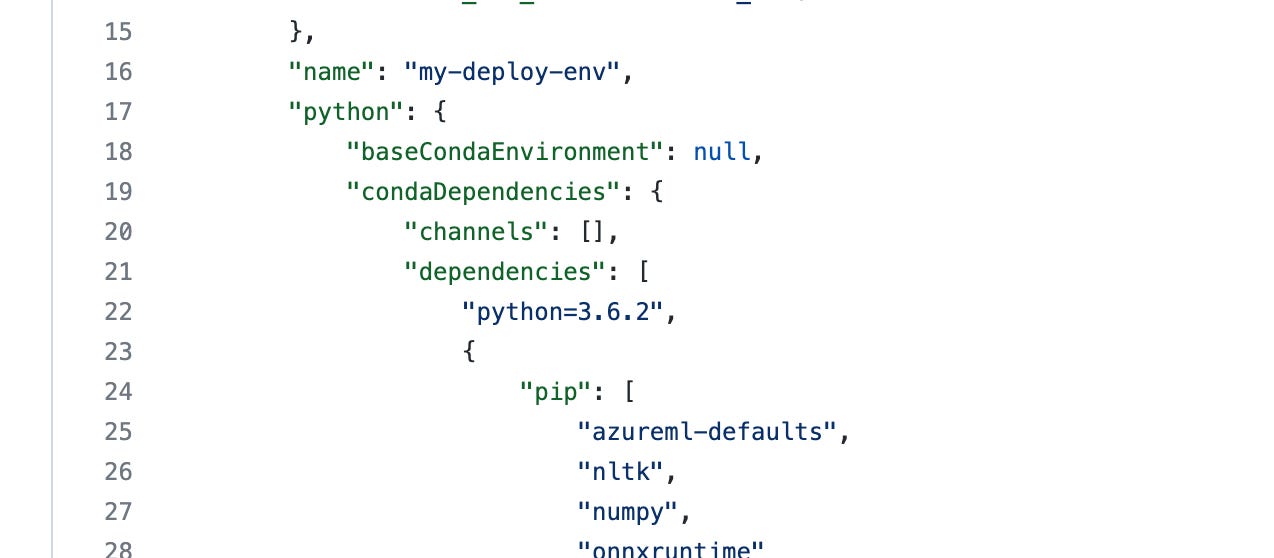

And my personal favorite: the Environment. Environments are just Docker containers with some extra ceremony. Over time, the ceremony grew. We added:

a pip_packages parameter, for customers to specify pip packages they needed installed

a conda_dependencies parameter, for customers averse to pip to define a conda environment they needed installed

an extra_dockerfile_steps parameter, for customers to define arbitrary docker steps to be run during build

a base_image parameter, for customers to specify which base image to build the environment from

a ports field, (if serving) for customer to specify which ports the container used for liveness and readiness probes

Our abstraction got so leaky that we ended up rebuilding nearly every feature that was already in Docker. Rather than simplifying things, we were forcing data scientists to learn Docker and learn how to map our supposedly “easier” syntax onto Docker concepts. In trying to build an easier experience, we’d actually made an incredibly frustrating one.

While I have the utmost respect for the Bing team’s accomplishments with data science, Bing’s history is not a useful roadmap. Data scientists of today are code-first creatures who are unafraid of complexity. I just spoke to a fintech startup where almost a third of the data science team are Certified Kubernetes Administrators.

While that example is definitely a bit extreme, ML platforms serving the next generation of data scientists need to think carefully about what we abstract. Are we providing narrow, deep functionality that solves hard problems, or are we providing so much flexibility that we might as well not exist?

in the Greek sense, not the Larry Ellison sense

Somehow we never made a big deal of the fact that this also stood for “tender loving care,” but that must have been intentional… right?